Before embarking on a full-scale, in-depth inspection of every technical aspect of your website, you can use several free tools to identify critical issues on your site. This free SEO audit tool kit uncovers common problems that don’t require high-level technical SEO expertise to fix but can lead to major improvements in your website’s performance and user experience. We highly recommend that all webmasters and digital marketers identify and resolve these low-effort, high-yield technical items before enlisting an SEO expert for deeper analysis.

Our light technical SEO audit checklist covers search engine visibility, user experience (UX), mobile compatibility, website crawl data, Core Web Vitals and structured data. Have we lost you already? Never fear. We’ll break it down for you with this quick and easy-to-follow list of steps for your next SEO website audit:

1. Validate that your pages are indexed correctly in search engines.

The very first question to ask yourself is: “Are the critical pages of my website being indexed appropriately by search engines?” We can’t emphasize enough how important a clean indexing strategy is, and this area of SEO is both the easiest to check and the most overlooked, which makes it the right place to start. In a nutshell, if Google isn’t indexing and displaying your pages correctly, your intended audience will have a much harder time discovering you.

Advanced Search Commands

Run an advanced search operator such as “site:yourdomain.com” to see roughly how many of your pages Google has indexed and how they’re displayed (example: “site:teamlewis.com”). If nothing shows up in the SERPs, either your site is new and needs to be submitted to Google, or it may be blocked from indexing by a ‘noindex’ robots meta tag or from crawling by your robots.txt file. You may also find historic pages you no longer want indexed; these should be removed and redirected.
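If the site: search comes back empty or suspiciously thin, one quick check is whether a page carries a noindex directive. The Python sketch below, which uses only the standard library, looks for `robots` or `googlebot` meta tags and the `X-Robots-Tag` HTTP header. The function and class names are hypothetical, and it deliberately ignores robots.txt, which is covered separately.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        a = {name: (value or "") for name, value in attrs}
        if a.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.extend(d.strip().lower() for d in a.get("content", "").split(","))


def is_noindexed(html, headers=None):
    """Return True if the HTML or the response headers carry noindex (or none)."""
    parser = RobotsMetaParser()
    parser.feed(html)
    directives = set(parser.directives)
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag":
            # Simplified: drops an optional "useragent:" prefix such as "googlebot: noindex".
            directives.update(d.split(":")[-1].strip().lower() for d in value.split(","))
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in directives or "none" in directives


def check_url(url):
    """Fetch a live page and test it (makes a network request)."""
    with urlopen(url) as resp:
        html = resp.read().decode("utf-8", errors="replace")
        return is_noindexed(html, resp.headers)
```

Running `check_url` against a handful of pages that should be indexed (your homepage and key landing pages) is a cheap way to catch a noindex left over from a staging site.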

XML Sitemap

Every website should have an up-to-date XML sitemap, which tells Google which pages you want crawled and indexed. It is typically located at (yourwebsite.com)/sitemap.xml. If you don’t have one, an SEO tool such as the Yoast SEO plugin for WordPress can generate it for you.
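To confirm that your sitemap actually lists the pages you care about, you can parse it and compare the URLs against your priority pages. A minimal sketch using Python’s standard `xml.etree.ElementTree`, assuming the sitemap follows the sitemaps.org protocol namespace; `parse_sitemap` and `fetch_sitemap` are hypothetical helper names. It handles both a plain URL set and a sitemap index that points to child sitemaps.

```python
import xml.etree.ElementTree as ET
from urllib.request import urlopen

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def parse_sitemap(xml_text):
    """Return (kind, locations); kind is 'urlset' or 'sitemapindex'."""
    root = ET.fromstring(xml_text)
    kind = root.tag.split("}")[-1]  # strip the "{namespace}" prefix
    child = "sm:url" if kind == "urlset" else "sm:sitemap"
    locs = [
        el.findtext("sm:loc", default="", namespaces=NS).strip()
        for el in root.findall(child, NS)
    ]
    return kind, [loc for loc in locs if loc]


def fetch_sitemap(url):
    """Download and parse a sitemap (makes a network request)."""
    with urlopen(url) as resp:
        return parse_sitemap(resp.read())
```

If `kind` comes back as `sitemapindex`, call `fetch_sitemap` on each returned location to collect the page URLs.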

Robots.txt

In July 2019, Google announced that it was working to make the Robots Exclusion Protocol an internet standard (it was published as RFC 9309 in 2022). Your robots.txt file, which follows this protocol, tells search engine crawlers which pages or files they can or can’t request from your site. Make sure you’re not accidentally blocking your entire site in this file. Bonus points if it declares the location of your XML sitemap with a Sitemap: line. The file is generally located at (yourwebsite.com)/robots.txt.
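Python’s standard `urllib.robotparser` module can run a quick sanity check on a robots.txt file: which of your key URLs it blocks for a given crawler, and whether it declares a sitemap. This is a sketch, and `audit_robots` is a hypothetical helper. Note that the standard-library parser uses simple prefix matching and does not implement every rule Google supports (such as wildcard patterns), so for complex files its answers may differ from Googlebot’s.

```python
from urllib.robotparser import RobotFileParser


def audit_robots(robots_txt, urls, user_agent="Googlebot"):
    """Return (blocked_urls, declared_sitemaps) for the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    blocked = [u for u in urls if not parser.can_fetch(user_agent, u)]
    # site_maps() returns None when no Sitemap: line is present.
    return blocked, parser.site_maps() or []


robots = """User-agent: *
Disallow: /private/

Sitemap: https://example.com/sitemap.xml
"""
print(audit_robots(robots, ["https://example.com/", "https://example.com/private/page"]))
```

An empty sitemap list is the signal to add a Sitemap: line; a blocked homepage is the signal to look for a stray `Disallow: /`.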

2. Use Google Search Console tools and reports to learn how to improve your site’s performance in Google Search.

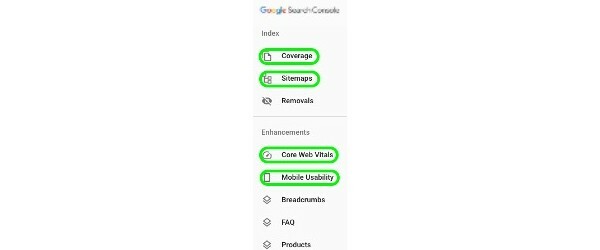

Google Search Console alerts you to fundamental SEO errors and issues that keep your site from reaching optimal visibility. First, make sure you’ve verified ownership of your web property. Search Console offers many insights, but we recommend starting with the following:

Index Report

- Coverage: The Coverage report (now called the Page indexing report) alerts you to issues that might prevent Google from crawling and indexing your pages.

- Sitemaps: This is where you submit your XML sitemap to Google and check whether your intended pages are being discovered successfully.

Enhancements Report

- Core Web Vitals: This report shows how your pages perform for real users on loading speed, interactivity and visual stability (how much the layout shifts while the page loads).

- Mobile Usability: Google announced that it would switch the whole web to mobile-first indexing starting in September 2020. This means Google predominantly uses the mobile version of your content for indexing and ranking. With mobile devices accounting for roughly half of web traffic worldwide, it’s imperative that your site is mobile friendly.
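The Core Web Vitals report groups URLs as “Good,” “Needs improvement” or “Poor” using Google’s published thresholds, applied to the 75th percentile of page loads. The sketch below mirrors that logic for your own field measurements. It assumes the current metric set of Largest Contentful Paint (LCP), Interaction to Next Paint (INP, which replaced First Input Delay in March 2024) and Cumulative Layout Shift (CLS); the helper names and the nearest-rank percentile method are our own choices, not Google’s.

```python
import math

# Google's published "good" / "poor" boundaries; values between them are
# rated "needs improvement". LCP and INP in milliseconds, CLS unitless.
THRESHOLDS = {
    "LCP": (2500, 4000),
    "INP": (200, 500),
    "CLS": (0.1, 0.25),
}


def p75(samples):
    """75th percentile using the nearest-rank method."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    return ordered[math.ceil(0.75 * len(ordered)) - 1]


def rate(metric, value):
    """Classify a single value against the metric's thresholds."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


def assess(samples_by_metric):
    """Rate each metric on its 75th-percentile value."""
    return {metric: rate(metric, p75(values)) for metric, values in samples_by_metric.items()}
```

For example, `assess({"LCP": [1800, 2100, 2600, 5000], "CLS": [0.02, 0.05, 0.08, 0.3]})` returns `{"LCP": "needs improvement", "CLS": "good"}`: one slow load is enough to push LCP’s 75th percentile past 2.5 seconds, while a single bad layout shift does not move CLS out of the good range.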

3. Perform a crawl of your website to identify and export key technical data.

A site crawler lets you pull a snapshot of all your pages along with their metadata. This can uncover critical items such as title tags, meta descriptions, meta robots tags, canonical tags and image alt attributes that are missing, duplicated, too long or too short, or irrelevant, and those findings will help drive your SEO strategy. Once you’ve identified an SEO issue, check out our SEO best practices for optimizing metadata.

- Screaming Frog: The Screaming Frog SEO Spider is a desktop program (Windows, macOS or Linux) that crawls a website’s links, images, CSS, scripts and apps from an SEO perspective. The free version crawls up to 500 URLs and lets you export several SEO reports.
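To illustrate what a crawler checks on each page, here is a small Python sketch that audits pages you have already downloaded (a mapping of URL to HTML). It is not how Screaming Frog works internally, and every name in it is hypothetical. The title and description length ranges are assumed rules of thumb, not limits published by Google. It treats `alt=""` as intentional (decorative images) and flags only images with no alt attribute at all.

```python
from collections import defaultdict
from html.parser import HTMLParser

# Rule-of-thumb length ranges in characters (assumptions, not Google limits).
TITLE_RANGE = (30, 60)
DESCRIPTION_RANGE = (70, 160)


class MetadataParser(HTMLParser):
    """Extracts the title, meta description, canonical URL and alt-less images."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self.canonical = None
        self.images_missing_alt = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        a = {k: (v or "") for k, v in attrs}
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and a.get("name", "").lower() == "description":
            self.description = a.get("content", "").strip()
        elif tag == "link" and "canonical" in a.get("rel", "").lower().split():
            self.canonical = a.get("href")
        elif tag == "img" and "alt" not in a:
            self.images_missing_alt += 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_pages(pages):
    """pages maps URL -> HTML string; returns a list of (url, issue) tuples."""
    issues = []
    urls_by_title = defaultdict(list)
    for url, html in pages.items():
        page = MetadataParser()
        page.feed(html)
        title = " ".join(page.title.split())  # collapse whitespace
        if not title:
            issues.append((url, "missing title"))
        else:
            urls_by_title[title].append(url)
            if not TITLE_RANGE[0] <= len(title) <= TITLE_RANGE[1]:
                issues.append((url, f"title length {len(title)}"))
        if page.description is None:
            issues.append((url, "missing meta description"))
        elif not DESCRIPTION_RANGE[0] <= len(page.description) <= DESCRIPTION_RANGE[1]:
            issues.append((url, f"meta description length {len(page.description)}"))
        if page.canonical is None:
            issues.append((url, "missing canonical"))
        if page.images_missing_alt:
            issues.append((url, f"{page.images_missing_alt} image(s) without alt attribute"))
    # Duplicate titles can only be found by comparing pages against each other.
    for title, urls in urls_by_title.items():
        if len(urls) > 1:
            issues.extend((url, "duplicate title") for url in urls)
    return issues
```

Sorting the resulting list by issue type gives you a rough equivalent of the missing, duplicate and too-long tabs in a crawler export.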

4. Test your site speed on mobile devices to enhance user experience (UX).

By now, the importance of a website that loads quickly on mobile devices should be clear. Tools such as Google PageSpeed Insights, Pingdom Website Speed Test and Lighthouse in Chrome will flag many issues, but we recommend starting with two: images over 100KB (which you can export from a Screaming Frog crawl) and Time to Interactive.

- Image compressors: Large image files can often be reduced substantially without a visible loss of quality. JPEG Optimizer and Optimizilla, as well as plugins such as ShortPixel Image Optimizer and Compress JPEG & PNG images, are all popular options.

- Serve images in next-gen formats: JPEG 2000, JPEG XR and WebP offer better compression and quality than their older JPEG and PNG counterparts. Browser support varies by format, so check it before switching; of these three, WebP is the most widely supported.

- Time to Interactive (TTI): This metric measures how long it takes for a page to become fully interactive. One of the most effective ways to improve TTI is to reduce JavaScript: remove scripts you don’t need, minify the rest and defer those that block the page from rendering.
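Both starting points can be checked with a short script. The Python sketch below uses hypothetical helper names. Rather than reading a Screaming Frog export (whose column layout we don’t assume here), `find_large_images` scans a local folder of site images and reads “100KB” as 100 × 1024 bytes. `find_render_blocking_scripts` lists external scripts in the page’s `<head>` that have neither `async` nor `defer` and are not ES modules (which are deferred by default); these are common candidates for deferring or removing. Inline scripts are not considered.

```python
from html.parser import HTMLParser
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".gif", ".webp", ".avif", ".svg"}
LIMIT_BYTES = 100 * 1024  # the 100KB rule of thumb, read as KiB


def find_large_images(root, limit=LIMIT_BYTES):
    """Return (path, size) for image files under root larger than limit, biggest first."""
    hits = [
        (path, path.stat().st_size)
        for path in Path(root).rglob("*")
        if path.is_file()
        and path.suffix.lower() in IMAGE_SUFFIXES
        and path.stat().st_size > limit
    ]
    return sorted(hits, key=lambda item: item[1], reverse=True)


class BlockingScriptParser(HTMLParser):
    """Collects external <script> tags in <head> that block HTML parsing."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.blocking = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "head":
            self.in_head = True
        elif tag == "script" and self.in_head and "src" in a:
            # async, defer and type="module" scripts do not block parsing.
            if "async" not in a and "defer" not in a and a.get("type") != "module":
                self.blocking.append(a["src"])

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def find_render_blocking_scripts(html):
    """Return the src of each render-blocking external script in <head>."""
    parser = BlockingScriptParser()
    parser.feed(html)
    return parser.blocking
```

Compressing the files at the top of the first list and adding `defer` to scripts in the second (after testing that nothing depends on their immediate execution) are usually the quickest wins.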

5. Optimize your pages with structured data markup.

Now that we’ve troubleshot several basic indexing issues, we can move on to more advanced optimization. Marking up your site and pages with structured data helps web crawlers understand your content, and it can also earn you more SERP real estate, such as rich results (review stars, product details and more) and Knowledge Panel information. A full list of structured data types can be found at Schema.org.

- Schema Markup Validator: Formerly Google’s Structured Data Testing Tool and now maintained by Schema.org, this handy tool checks your markup for errors or missing information, either before or after you add it to your pages.

- Google Rich Results Test: This tool checks a URL (or a code snippet) to see which rich results your page is eligible for and previews how they will look on Google on both mobile and desktop.
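Structured data is most often added as a JSON-LD `<script>` block in the page’s HTML. As one example, the Python sketch below builds schema.org `Organization` markup that you could paste into a page template and then check with the tools above. The helper name and all values are hypothetical; `name`, `url`, `logo` and `sameAs` are standard schema.org properties, but which properties Google uses for a given feature is documented per type, so check its guidelines for your chosen type.

```python
import json


def organization_jsonld(name, url, logo, same_as=()):
    """Return a <script> tag containing schema.org Organization markup."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "logo": logo,
    }
    if same_as:
        # Links to the organization's official profiles elsewhere.
        data["sameAs"] = list(same_as)
    # Escape "</" so a value can never close the script tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


print(organization_jsonld(
    "Example Co",
    "https://www.example.com",
    "https://www.example.com/logo.png",
    ["https://www.linkedin.com/company/example"],
))
```

Generating the markup from the same data that renders the visible page helps keep the two in sync, which matters because structured data should describe content users can actually see.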

Congratulations! You’ve now successfully completed a basic diagnostic of your website’s technical SEO health.

As we mentioned earlier, this website SEO audit is just a starting point for uncovering items that can quickly move the needle toward optimal site performance. At the end of the day, we want to make it as easy as possible for your audience to find all the high-quality, unique content you’ve created for them. Reviewing these critical technical items and outlining an SEO strategy to resolve the “low-hanging fruit” is a huge leap toward maximizing the return on your investment of time and resources.

Want to improve your SEO efforts today? Check out our SEO audit service and let’s chat.